Introduction to deepfake

Deepfake is an artificial intelligence-based technology that uses machine learning algorithms, particularly generative adversarial networks (GANs), to generate synthetic media such as images, video, and audio. The goal is to create highly realistic synthetic media that resembles real people, but with some aspect of the content manipulated. Deepfake technology[1] rests on two techniques: deep learning and generative adversarial networks.

Deep learning is a subfield of machine learning that uses artificial neural networks, algorithms inspired by the structure and function of the brain, to process and analyse large amounts of data. It has been applied in a wide range of fields such as computer vision, natural language processing, speech recognition, and robotics.

Generative adversarial networks (GANs) are a type of deep learning architecture in which two neural networks, a generator and a discriminator, are trained on a dataset in order to generate new, synthetic data that resembles the original data. The generator creates fake samples, while the discriminator assesses the authenticity of both the generated samples and the real samples from the training dataset. The two networks are trained adversarially: the generator tries to produce samples that fool the discriminator, while the discriminator tries to distinguish the generated samples from the real ones. This process continues until the generator can produce highly realistic synthetic data.
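The adversarial training loop described above can be illustrated with a deliberately tiny sketch. It is not how production deepfake models are built: the "data" are single numbers rather than images, the generator and discriminator are one-line functions rather than deep networks, and every name and hyperparameter (`train_toy_gan`, `real_mean`, `lr`, and so on) is an assumption made for illustration. Toy setups like this may oscillate rather than settle, so the final parameters should be treated as indicative only.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.05, real_mean=4.0, seed=0):
    """Train a one-dimensional toy GAN and return (a, b, w, c).

    Generator:      g(z) = a * z + b, with noise z drawn from N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x + c), the estimated P(x is real)
    All names and hyperparameters are illustrative assumptions.
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        x_real = rng.gauss(real_mean, 1.0)  # one sample of "real" data
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b                  # the generator's fake sample

        # Discriminator step: gradient ascent on
        # log D(real) + log(1 - D(fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w += lr * ((1.0 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: gradient ascent on log D(fake), i.e. try to
        # fool the freshly updated discriminator.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (1.0 - d_fake) * w         # d/dx of log D(x) at x_fake
        a += lr * grad_x * z
        b += lr * grad_x
    return a, b, w, c


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print(f"generator: g(z) = {a:.2f} * z + {b:.2f}")
```

The generator never sees the real data directly. It only receives the discriminator's gradient, which is the "feedback" that pushes its output towards samples the discriminator accepts as real.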

Illustration: Face2Face method[2]

Creation of deepfakes

Deepfakes are created using generative adversarial networks (GANs). A GAN consists of two neural networks, a generator and a discriminator, that are trained on a large dataset of real images, videos, or audio. The generator creates synthetic data, such as a synthetic image, that resembles the real data in the training set. The discriminator then assesses the authenticity of the synthetic data, and its verdict serves as feedback to the generator on how to improve its output. This process is repeated many times, with the generator and discriminator learning from each other, until the generator produces synthetic data that is highly realistic and difficult to distinguish from the real data. The trained model is then used to create video and image deepfakes, which take several forms:

(a) face swap: replacing the face of the person in the video with the face of another person;

(b) attribute editing: changing characteristics of the person in the video, e.g. the style or colour of the hair;

(c) face re-enactment: transferring the facial expressions of one person on to the person in the target video; and

(d) fully synthetic material: real material is used to train a model on what people look like, but the resulting picture is entirely made up.

Detection of deepfakes

It is important to note that deepfake technology is constantly evolving and improving, so deepfake detection techniques need to be regularly updated to keep up with the latest developments. Currently, the best way to determine if a piece of media is a deepfake is to use a combination of multiple detection techniques and to be cautious of any content that seems too good to be true. Here are some of the most common techniques used to detect deepfakes:

Visual artifacts. — Some deepfakes have noticeable visual artifacts[3] that give the content away as fake. Such artifacts can arise from limitations in the training data, limitations in the deep learning algorithms, or the need to compromise between realism and computational efficiency. Common examples include unnatural facial movements or expressions, unnatural or inconsistent eye blinking, and mismatched or missing details in the background.
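As one hedged illustration of how an artifact such as inconsistent eye blinking might be checked, the sketch below counts blinks in a per-frame "eye aspect ratio" series (a commonly used eye-openness measure that drops when the eye closes) and flags blink rates outside a plausible band. The input series is assumed to come from some face-landmark tool that is not shown here, and the thresholds (`closed_threshold`, `low`, `high`) are illustrative assumptions, not validated forensic values.

```python
def count_blinks(ear_series, closed_threshold=0.2, min_closed_frames=2):
    """Count blinks as runs of consecutive 'closed eye' frames.

    ear_series: per-frame eye-openness values (hypothetical input).
    A run must last at least min_closed_frames to count as a blink.
    """
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < closed_threshold:
            run += 1
        else:
            if run >= min_closed_frames:
                blinks += 1
            run = 0
    if run >= min_closed_frames:  # clip ended while the eye was closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps, **kwargs):
    """Convert the blink count into a rate for a clip recorded at fps."""
    if not ear_series or fps <= 0:
        raise ValueError("need a non-empty series and a positive fps")
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes


def looks_suspicious(rate, low=5.0, high=40.0):
    """Flag rates outside an assumed 'plausible' band (illustrative only)."""
    return rate < low or rate > high


if __name__ == "__main__":
    series = [0.3] * 10 + [0.1] * 3 + [0.3] * 10 + [0.1] + [0.3] * 6
    rate = blinks_per_minute(series, fps=30)
    print(count_blinks(series), looks_suspicious(rate))
```

A single cue like this is weak evidence on its own, which is why the text recommends combining several techniques.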

Audio-visual mismatches. — In some deepfakes, the audio and visual content do not match perfectly, which can indicate that the content has been manipulated. For example, the lip movements of a person in a deepfake video may not match the audio, or the audio may contain background noise or echoes that are not present in the video[4].
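A lip-sync check of this kind can be sketched, under strong simplifying assumptions, as a correlation between two per-frame signals: how open the mouth is and how loud the audio is. Both series are hypothetical inputs that would have to be extracted by other tools, and the function names are illustrative. The sketch looks for the time shift (lag) at which the two signals agree best; a large shift or a weak best correlation would be a reason for closer inspection, not proof of manipulation.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences.

    Returns 0.0 when either sequence is constant.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences of 2+ values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def best_alignment(mouth_open, audio_energy, max_lag=5):
    """Return (lag, correlation) for the best-matching lag.

    A positive lag means the audio trails the video by that many frames.
    """
    n = min(len(mouth_open), len(audio_energy))
    max_lag = min(max_lag, n - 2)  # keep at least 2 overlapping frames
    best_lag, best_r = 0, -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            video, audio = mouth_open[:n - lag], audio_energy[lag:n]
        else:
            video, audio = mouth_open[-lag:n], audio_energy[:n + lag]
        r = pearson(video, audio)
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


if __name__ == "__main__":
    mouth = [0, 1, 0, 2, 0, 3, 0, 1, 4, 0, 2, 0]
    delayed_audio = [0, 0] + mouth[:-2]  # audio shifted two frames late
    print(best_alignment(mouth, delayed_audio))
```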

Deep learning-based detection. — Deep learning algorithms, such as deep neural networks, can be used to detect deepfakes by training them on a large dataset of real and fake images, videos, or audio. The algorithm learns the patterns and artifacts that are typical of fake content, such as unnatural facial movements, inconsistent eye blinking, and audio-visual mismatches. Once trained, the algorithm can analyse new, unseen media; if it judges a piece of media to be fake, it can flag it for manual inspection or further analysis[5].
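The train-then-flag workflow can be sketched as follows. To keep the example self-contained, a plain logistic regression stands in for the deep neural network, and each media item is reduced to two hand-made feature scores (for instance, blink irregularity and audio-visual mismatch). The features, data, and function names are all illustrative assumptions; a real detector would learn from raw pixels and audio on a far larger dataset.

```python
import math


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_detector(samples, labels, lr=1.0, epochs=1000):
    """Fit logistic regression by full-batch gradient descent.

    samples: list of feature vectors; labels: 1 = fake, 0 = real.
    Returns (weights, bias).
    """
    n = len(samples)
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(samples, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of the log-loss w.r.t. the logit
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        weights = [wi - lr * g / n for wi, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def fake_probability(model, features):
    """Estimated probability that an unseen item is fake."""
    weights, bias = model
    return sigmoid(sum(wi * xi for wi, xi in zip(weights, features)) + bias)


def triage(model, features, threshold=0.5):
    """Flag likely fakes for a human reviewer instead of deciding outright."""
    if fake_probability(model, features) >= threshold:
        return "flag for manual inspection"
    return "pass"


if __name__ == "__main__":
    real = [[0.1, 0.2], [0.2, 0.1], [0.15, 0.05], [0.05, 0.1]]
    fake = [[0.8, 0.9], [0.9, 0.7], [0.7, 0.8], [0.85, 0.95]]
    model = train_detector(real + fake, [0] * 4 + [1] * 4)
    print(triage(model, [0.8, 0.85]), "/", triage(model, [0.1, 0.1]))
```

Routing flagged items to manual inspection, rather than rejecting them automatically, mirrors the workflow described in the paragraph above.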

Forensics-based detection. — Forensics-based detection methods analyse various aspects of an image or video, such as the geometric relationships between facial features, to determine whether the content has been manipulated.[6] Other forensic methods analyse patterns in audio and video data and examine inconsistencies in lighting, shading, and other visual cues. These methods can be effective in detecting deepfakes, but they are not foolproof: deepfake creators are constantly improving their techniques to make forgeries more convincing, so forensic methods must be continually updated, and a sophisticated deepfake may still go undetected.
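One way to picture a geometric check is to compute a scale-independent ratio between facial landmarks in each frame and measure how much it drifts across a clip. The sketch below assumes hypothetical landmark coordinates (`left_eye`, `right_eye`, `mouth`) supplied by some external face-landmark tool, and assumes roughly frontal frames, since head rotation alone also changes the ratio. It illustrates the idea only and is not a validated forensic method.

```python
import math
import statistics


def eye_to_mouth_ratio(landmarks):
    """Distance from eye midpoint to mouth, divided by eye-to-eye distance.

    landmarks: dict with (x, y) tuples under the hypothetical keys
    'left_eye', 'right_eye' and 'mouth'.
    """
    left, right, mouth = (landmarks["left_eye"], landmarks["right_eye"],
                          landmarks["mouth"])
    eye_mid = ((left[0] + right[0]) / 2.0, (left[1] + right[1]) / 2.0)
    inter_ocular = math.dist(left, right)
    if inter_ocular == 0:
        raise ValueError("eye landmarks coincide")
    return math.dist(eye_mid, mouth) / inter_ocular


def ratio_instability(frames):
    """Coefficient of variation of the ratio across frames.

    Larger values mean the facial geometry drifts more from frame to frame.
    """
    ratios = [eye_to_mouth_ratio(f) for f in frames]
    return statistics.pstdev(ratios) / statistics.fmean(ratios)


if __name__ == "__main__":
    # The same face at three zoom levels: the ratio should not change.
    stable = [
        {"left_eye": (0, 0), "right_eye": (4 * s, 0), "mouth": (2 * s, 3 * s)}
        for s in (1, 2, 3)
    ]
    # The mouth landmark jumps around relative to the eyes.
    jittery = [
        {"left_eye": (0, 0), "right_eye": (4, 0), "mouth": (2, y)}
        for y in (3, 5, 2)
    ]
    print(ratio_instability(stable), ratio_instability(jittery))
```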

Multi-model ensemble. — A multi-model ensemble is a popular approach in deepfake detection because it can provide more accurate results than a single model[7]. For example, one model might analyse the geometric properties of facial features, while another might detect inconsistencies in lighting and shading. By combining the outputs of these detection methods, a multi-model ensemble can provide a more comprehensive and robust evaluation of the authenticity of an image or video.
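The simplest way to combine several detectors is a weighted average of their scores, sketched below. The detector names and weights are hypothetical; each score is assumed to be a probability-like value between 0 (real) and 1 (fake). Real ensembles may instead use voting or a second model trained on the detectors' outputs.

```python
def ensemble_score(scores, weights=None):
    """Weighted average of per-detector fake scores.

    scores:  dict mapping detector name -> score in [0, 1].
    weights: optional dict mapping detector name -> non-negative weight;
             defaults to equal weighting.
    """
    if not scores:
        raise ValueError("need at least one detector score")
    if any(not 0.0 <= s <= 1.0 for s in scores.values()):
        raise ValueError("scores must lie in [0, 1]")
    if weights is None:
        weights = {name: 1.0 for name in scores}
    total = sum(weights[name] for name in scores)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(scores[name] * weights[name] for name in scores) / total


if __name__ == "__main__":
    scores = {"geometry": 0.9, "lighting": 0.6, "audio_visual": 0.3}
    trust_geometry = {"geometry": 2.0, "lighting": 1.0, "audio_visual": 1.0}
    print(ensemble_score(scores), ensemble_score(scores, trust_geometry))
```

Weighting lets a more reliable detector count for more, while a disagreement between detectors pulls the combined score towards the middle, which is itself a useful signal for manual review.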

Offences Caused Using Deepfakes

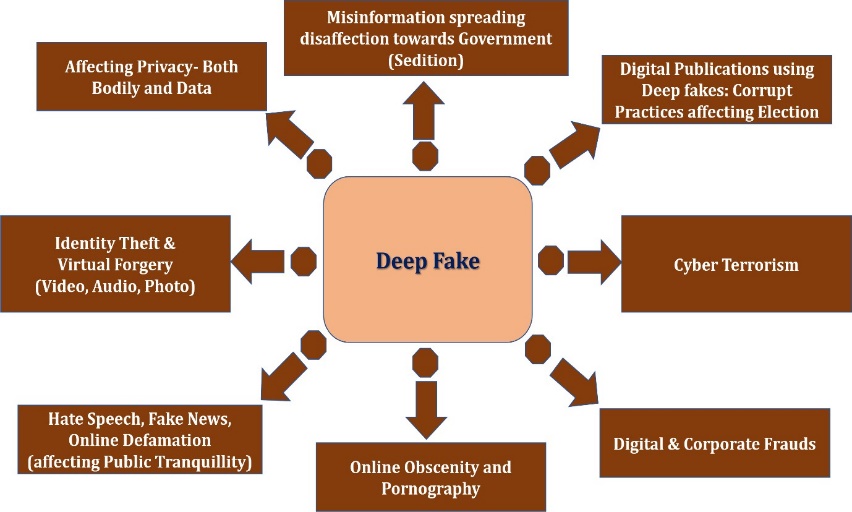

Illustrations of various crimes committed using deepfakes

Offences committed by using deepfakes

Deepfake technology opens up many possibilities for the commission of crimes. The technology itself does not pose a threat; however, it can be used as a tool to commit crimes against individuals and society. The following crimes can be committed using deepfakes:

Identity theft and virtual forgery. — Identity theft and virtual forgery using deepfakes can be serious offences and can have significant consequences for individuals and society as a whole. The use of deepfakes to steal someone’s identity, create false representations of individuals, or manipulate public opinion can harm an individual’s reputation and credibility and can spread misinformation. These crimes can be prosecuted under Section 66[8] (computer-related offences) and Section 66-C[9] (punishment for identity theft) of the Information Technology Act, 2000. Sections 420[10] and 468[11] of the Penal Code, 1860 could also be invoked in this regard.

Misinformation against Governments. — The use of deepfakes to spread misinformation, subvert the Government, or incite hatred and disaffection against the Government is a serious issue and can have far-reaching consequences for society. The spread of false or misleading information can create confusion, undermine public trust, and be used to manipulate public opinion or influence political outcomes. These crimes can be prosecuted under Section 66-F[12] (cyber terrorism) and the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Amendment Rules, 2022[13], both under the Information Technology Act, 2000[14]. Section 121[15] (waging war against the Government of India) and Section 124-A[16] of the Penal Code, 1860 could also be invoked in this regard.

Hate speech and online defamation. — Hate speech and online defamation using deepfakes can be serious issues that harm individuals and society as a whole. The use of deepfakes to spread hate speech or defamatory content can cause significant harm to the reputation and well-being of individuals and can contribute to a toxic online environment. These crimes can be prosecuted under the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Amendment Rules, 2022 made under the Information Technology Act, 2000. Sections 153-A[17] and 153-B[18] (speech affecting public tranquility) and Section 499[19] (defamation) of the Penal Code, 1860 could also be invoked in this regard.

Practices affecting elections. — The use of deepfakes in elections can have significant consequences and can undermine the integrity of the democratic process. Deepfakes can be used to spread false or misleading information about political candidates, to manipulate public opinion, and to influence the outcome of an election. The impact of deepfakes on elections is a growing concern, and many Governments and organisations are taking steps to address it. These crimes can be prosecuted under Section 66-D[20] (punishment for cheating by personation by using computer resource) and Section 66-F[21] (cyber terrorism) of the Information Technology Act, 2000. Sections 123(3-A)[22], 123 and 125[23] of the Representation of the People Act, 1951, and the “Voluntary Code of Ethics for the General Election, 2019” presented by social media platforms and the Internet and Mobile Association of India (IAMAI), could also be invoked to tackle the menace affecting elections in India.

Violation of privacy/obscenity and pornography. — Deepfake technology can be used to create fake images or videos that depict people doing or saying things that never actually happened, potentially damaging the reputation of individuals or spreading false information. Deepfakes can also be used for malicious purposes such as non-consensual pornography, political propaganda, or misinformation campaigns. This can have serious implications for individuals whose images or likenesses are used without their consent, as well as for society at large. These crimes can be prosecuted under Section 66-E[24] (punishment for violation of privacy), Section 67[25] (punishment for publishing or transmitting obscene material in electronic form), Section 67-A[26] (punishment for publishing or transmitting of material containing sexually explicit act, etc. in electronic form), and Section 67-B[27] (punishment for publishing or transmitting of material depicting children in sexually explicit act, etc. in electronic form) of the Information Technology Act, 2000. Sections 292[28] and 294[29] (punishment for sale, etc. of obscene material) of the Penal Code, 1860 and Sections 13[30], 14[31] and 15[32] of the Protection of Children from Sexual Offences Act, 2012 (POCSO) could also be invoked in this regard to protect the rights of women and children.

Conclusion and suggestions

The current legislation in India regarding cyber offences caused using deepfakes is not adequate to fully address the issue. The lack of specific provisions in the IT Act, 2000 regarding artificial intelligence, machine learning, and deepfakes makes it difficult to effectively regulate the use of these technologies. In order to better regulate offences caused using deepfakes, it may be necessary to update the IT Act, 2000 to include provisions that specifically address the use of deepfakes and the penalties for their misuse. This could include increased penalties for those who create or distribute deepfakes for malicious purposes, as well as stronger legal protections for individuals whose images or likenesses are used without their consent.

The development and use of deepfakes is also a global issue, and regulating their use and preventing privacy violations will likely require international cooperation and collaboration. In the meantime, individuals and organisations should be aware of the potential risks associated with deepfakes and be vigilant in verifying the authenticity of information encountered online. Governments, for their part, can adopt the following approaches:

(a) The first is the censorship approach, which blocks public access to misinformation by issuing orders to intermediaries and publishers.

(b) The second is the punitive approach, which imposes liability on individuals or organisations originating or disseminating misinformation.

(c) The third is the intermediary regulation approach, which imposes obligations upon online intermediaries to expeditiously remove misinformation from their platforms, failing which they could incur liability as stipulated under Sections 69-A[33] and 79[34] of the Information Technology Act, 2000.

† Teaching and Research Assistant, School of Law-Forensic Justice and Policy Studies, National Forensic Sciences University, Gandhinagar, India. Author can be reached at shubham.pandey@nfsu.ac.in.

†† Assistant Professor, School of Law-Forensic Justice and Policy Studies, National Forensic Sciences University, Gandhinagar, India. Author can be reached at gaurav.jadhav@nfsu.ac.in.

1. Jia Wen Seow, et al., “A Comprehensive Overview of Deepfake: Generation, Detection, Datasets and Opportunities”, 513 Neurocomputing 351-371 (2022) <https://doi.org/10.1016/j.neucom.2022.09.135> accessed on 6-2-2023.

2. J. Thies et al., Face2Face: Real-Time Face Capture and Reenactment of RGB Videos (CVPR 2016, Las Vegas, June 2016) <https://www.cv-foundation.org/openaccess/content_cvpr_2016/papers/Thies_Face2Face_Real-Time_Face_CVPR_2016_paper.pdf> accessed on 6-2-2023.

3. Conference on Computer Vision and Pattern Recognition Workshops, 2020 <https://www.ohadf.com/papers/AgarwalFaridFriedAgrawala_CVPRW2020.pdf> accessed on 8-2-2023.

4. Yipin Zhou and Ser-Nam Lim, Joint Audio-Visual Deepfake Detection, Computer Vision Foundation 2022 <https://openaccess.thecvf.com/content/ICCV2021/papers/Zhou_Joint_Audio-Visual_Deepfake_Detection_ICCV_2021_paper.pdf> accessed on 8-2-2022.

5. Agarwal, S. et al., Protecting World Leaders Against Deep Fakes, Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, pp. 38-45, 2019 <http://www.hao-li.com/publications/papers/cvpr2019workshopsPWLADF.pdf> accessed on 9-2-2023.

6. Samuel H. Silva, et al., “Deepfake Forensics Analysis: An Explainable Hierarchical Ensemble of Weakly Supervised Models”, 4 Forensic Science International: Synergy 100217 (2022) <https://doi.org/10.1016/j.fsisyn.2022.100217> accessed on 9-2-2023.

7. Abdulhameed Al Obaid, et al., “Multimodal Fake-News Recognition Using Ensemble of Deep Learners”, 24 Entropy 1242 (2022) <https://doi.org/10.3390/e24091242> accessed on 9-2-2023.

8. Information Technology Act, 2000, S. 66.

9. Information Technology Act, 2000, S. 66-C.

12. Information Technology Act, 2000, S. 66-F.

13. Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Amendment Rules, 2022.

14. Information Technology Act, 2000.

16. Penal Code, 1860, S. 124-A.

17. Penal Code, 1860, S. 153-A.

18. Penal Code, 1860, S. 153-B.

20. Information Technology Act, 2000, S. 66-D.

21. Information Technology Act, 2000, S. 66-F.

22. Representation of the People Act, 1951, S. 123(3-A).

23. Representation of the People Act, 1951, S. 125.

24. Information Technology Act, 2000, S. 66-E.

25. Information Technology Act, 2000, S. 67.

26. Information Technology Act, 2000, S. 67-A.

27. Information Technology Act, 2000, S. 67-B.

30. Protection of Children from Sexual Offences Act, 2012, S. 13.

31. Protection of Children from Sexual Offences Act, 2012, S. 14.

32. Protection of Children from Sexual Offences Act, 2012, S. 15.
